
Business Area

Advanced AI agents, together with DIA NEXUS.

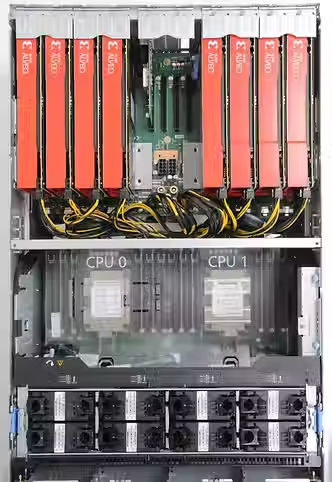

Demand for AI models is diversifying. Rather than relying on a single large model, organizations are increasingly choosing different models for specific use cases. DAEWON CTS is leading this shift with AI accelerator–based appliances built on GPUs, NPUs, LPUs, and VPUs, which deliver GPU-class performance with greater cost efficiency for specialized workloads.

Model + AI Accelerator Consulting

We propose the optimal combination of AI models and accelerators for your requirements and operating conditions.

Performance

We provide ongoing performance optimization after the system is deployed.

Sizing

We define AI appliance specifications tailored to the customer’s AI strategy.

Tech Support

We provide one-stop, full-stack AI technical support from hardware to software.


Why Choose Us

Why DIA NEXUS is unique.

Customized proposals

Drawing on deep expertise in diverse accelerator (xPU) technologies, including NPUs, LPUs, and GPUs, we design hardware configurations suited to each customer's workloads. Rather than relying on a single type of chip for every task, we pair each workload with a specialized processor to maximize performance and efficiency. This optimization capability delivers a competitive advantage not only in performance but also in power and cost efficiency.

Single point of contact

DAEWON CTS delivers integrated hardware and software solutions from a single company, so customers receive technical support through a single point of contact instead of coordinating multiple vendors. DAEWON CTS provides end-to-end services and takes responsibility for everything from design and deployment to optimization and maintenance.

DIA NEXUS Partner Ecosystem

DAEWON CTS is expanding its partnerships with global IT companies to offer tailored solutions that improve the efficiency of AI infrastructure investment and resource utilization across diverse customer requirements.

Powerful hardware specifications

HyperAccel’s Orion uses a parallel architecture of 16 LPUs (Latency Processing Units), with a total of 256 GB of HBM, 7.36 TB/s of aggregate memory bandwidth, and 144K DSP compute resources. This gives it the performance to run ultra-large models with up to 100 billion parameters.
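As a rough sanity check on these figures, the sketch below divides the aggregate specifications across the 16 LPUs and tests whether a model's weights fit in HBM. It assumes decimal units (1 GB = 10^9 bytes), an even split of resources across LPUs, and that only model weights count toward capacity (KV cache and activations are ignored). The helper names are hypothetical, not part of any HyperAccel software.

```python
# Rough capacity check for the Orion figures quoted above.
# Assumptions (not from HyperAccel): decimal units, an even split across LPUs,
# and only model weights count against HBM capacity.

NUM_LPUS = 16
TOTAL_HBM_GB = 256.0   # aggregate HBM capacity
TOTAL_BW_TBPS = 7.36   # aggregate HBM bandwidth in TB/s


def per_lpu(total: float, num_lpus: int = NUM_LPUS) -> float:
    """Share of an aggregate resource held by one LPU, assuming an even split."""
    return total / num_lpus


def weight_footprint_gb(params_billion: float, bytes_per_param: float) -> float:
    """Weight memory in GB: (params_billion * 1e9) params * bytes / 1e9 bytes per GB."""
    return params_billion * bytes_per_param


def weights_fit(params_billion: float, bytes_per_param: float,
                capacity_gb: float = TOTAL_HBM_GB) -> bool:
    """True if the weights alone fit in the given HBM capacity."""
    return weight_footprint_gb(params_billion, bytes_per_param) <= capacity_gb


if __name__ == "__main__":
    print(f"HBM per LPU:       {per_lpu(TOTAL_HBM_GB):.1f} GB")
    print(f"Bandwidth per LPU: {per_lpu(TOTAL_BW_TBPS) * 1000:.0f} GB/s")
    for label, nbytes in [("FP16", 2), ("INT8", 1), ("FP32", 4)]:
        gb = weight_footprint_gb(100, nbytes)
        print(f"100B params @ {label}: {gb:.0f} GB, fits: {weights_fit(100, nbytes)}")
```

Under these assumptions, each LPU holds 16 GB of HBM with about 460 GB/s of bandwidth. A 100-billion-parameter model stored in FP16 needs about 200 GB for its weights, which fits within 256 GB with some headroom for the KV cache, while the same model in FP32 (about 400 GB) would not fit.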

High-speed processing technology

Orion uses an architecture optimized for AI inference, built around SMA (Streamlined Memory Access) and SXE (Streamlined Execution Engine). By closely balancing memory bandwidth against compute resources, it maximizes system resource utilization and achieves up to 90% utilization of the available memory bandwidth in practice.
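Bandwidth utilization matters because, in single-stream (batch size 1) LLM decoding, generating each token typically requires reading every model weight from memory once, so the token rate is bounded by effective bandwidth divided by model size. The sketch below applies that roofline-style estimate to the figures above. It assumes FP16 weights for a 100-billion-parameter model, treats the 16 LPUs' aggregate bandwidth as usable in parallel (e.g., with weights partitioned across devices), ignores KV-cache traffic and compute time, and treats the 90% figure as sustained utilization. The result is an upper bound, not a measured benchmark, and the function name is hypothetical.

```python
# Roofline-style upper bound on batch-1 decode speed for a memory-bound LLM.
# Assumptions: every weight byte is read once per generated token, KV-cache
# traffic and compute time are ignored, and utilization is sustained.


def max_decode_tokens_per_s(weight_bytes: float,
                            peak_bw_bytes_per_s: float,
                            utilization: float) -> float:
    """Upper bound on tokens/s when decoding is limited purely by memory bandwidth."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    effective_bw = peak_bw_bytes_per_s * utilization
    seconds_per_token = weight_bytes / effective_bw
    return 1.0 / seconds_per_token


if __name__ == "__main__":
    weights = 100e9 * 2   # 100B params at FP16 (2 bytes each) = 200 GB
    peak_bw = 7.36e12     # 7.36 TB/s aggregate HBM bandwidth
    # 50% is a hypothetical comparison point; 90% is the figure quoted above.
    for util in (0.5, 0.9):
        tps = max_decode_tokens_per_s(weights, peak_bw, util)
        print(f"utilization {util:.0%}: <= {tps:.1f} tokens/s")
```

Under these assumptions, raising utilization from 50% to 90% lifts the bound from about 18.4 to about 33.1 tokens/s, which is why sustained bandwidth utilization matters so much for memory-bound inference.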

Excellent scalability and low latency

HyperAccel’s ESL (Expandable Synchronization Link) technology tightly couples multiple LPUs to minimize synchronization latency between devices. Each LPU passes data to the next as soon as its computation completes, which accelerates parallel processing and yields near-linear performance growth as nodes are added, giving the system excellent scale-out characteristics.
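“Near-linear” scale-out is usually quantified as scaling efficiency: the throughput measured with n devices divided by n times the single-device throughput, where 1.0 means perfectly linear scaling. The helper below is a generic definition, not a HyperAccel tool, and the numbers in the example are hypothetical.

```python
def scaling_efficiency(throughput_1: float, throughput_n: float, n: int) -> float:
    """Fraction of ideal linear speedup achieved with n devices (1.0 = perfectly linear)."""
    if n < 1 or throughput_1 <= 0:
        raise ValueError("n must be >= 1 and throughput_1 must be positive")
    return throughput_n / (n * throughput_1)


if __name__ == "__main__":
    # Hypothetical numbers for illustration only (not HyperAccel measurements).
    single = 100.0  # tokens/s on one node
    quad = 380.0    # tokens/s on four nodes
    print(f"{scaling_efficiency(single, quad, 4):.2f}")  # 0.95
```

An efficiency that stays close to 1.0 as n grows is what "near-linear" scaling means in practice.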

HyperAccel’s Orion AI appliance is designed around dedicated processors optimized for AI inference. By leveraging inference-specialized accelerators, it achieves a balance between high performance and energy efficiency that is difficult to attain with general-purpose GPUs.
